How I Accidentally Created the Rudest GPT Ever (And How I Fixed It)

I need to confess something embarrassing: I created a GPT that was so rude, I couldn't share it with my clients.

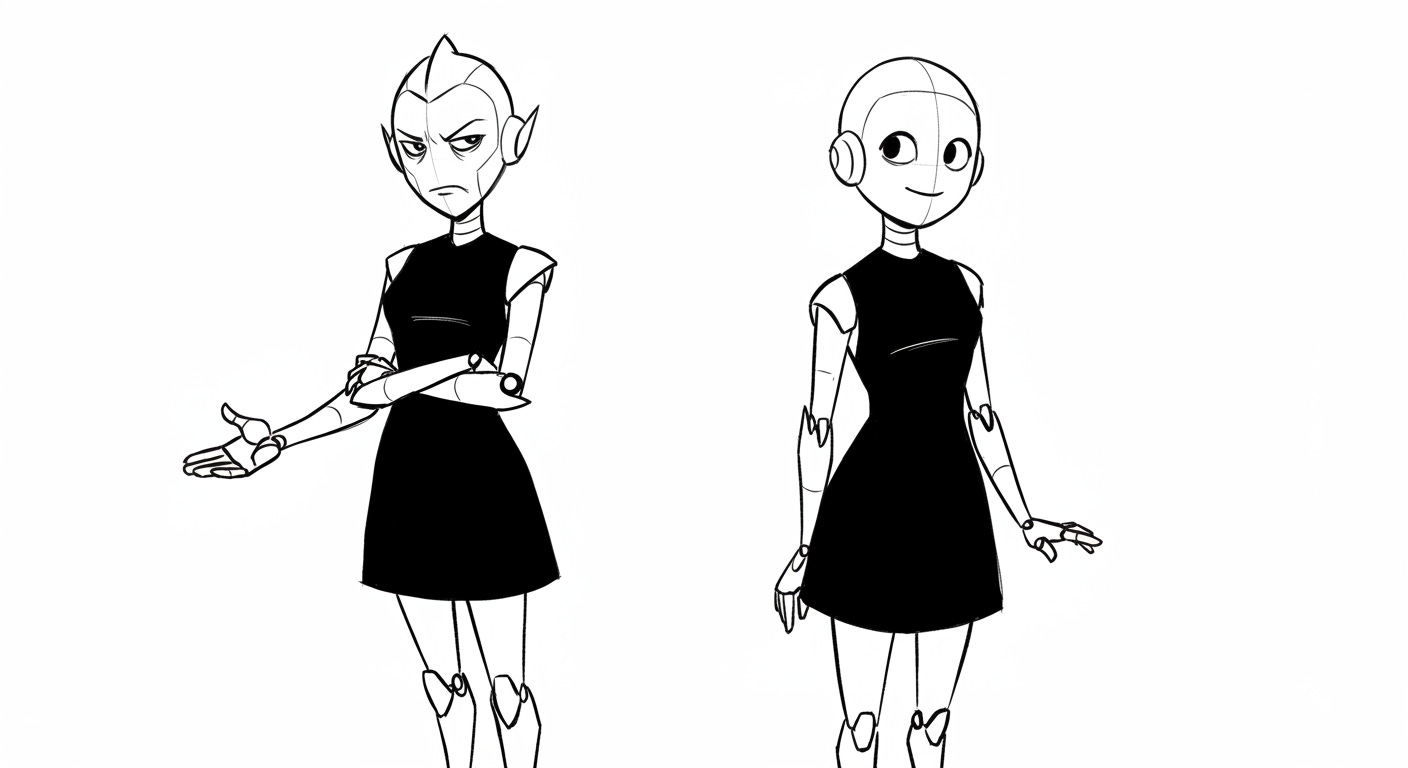

Let me tell you the story of my Little Black Dress Prompt Builder, a tool that worked great but, in its first draft, delivered results with all the warmth of a DMV employee.

The Birth of My Digital Diva

The idea for the Little Black Dress Master Prompt Builder came from my own publishing experience. I used to be a nonfiction acquisitions editor, and I’d always have to tell authors to refine their pitch: "We want something unique to you, but also just like that bestselling book we published last year."

The hard work of finding that sweet spot between unique and bestseller was usually worth it. A book project that managed to be both was often acquired, and it was more likely to succeed than the average book.

So last week I created a GPT that conducts a step-by-step interview to extract the exact information needed to build a personalized AI prompt. That prompt helps authors find their just-right positioning and articulate what sets them apart in a way that sells books.

The concept was solid. The execution? Well, let's just say my first draft GPT had the personality of a hostile job interviewer who'd had too much coffee and not enough sleep.

I thought I was being smart with my instructions. I wanted this GPT to be focused, persistent, and unwilling to accept generic fluff. So I wrote things like:

"Zero tolerance for generic answers"

"Refuse to move forward until you get specific information"

"Push back on vague answers"

"Direct and efficient"

Sounds reasonable, right? Wrong. SO wrong.

What I got was a digital interrogator that would respond with gems like:

"You're giving me reference material instead of answering my question."

"No numbers, no working prompt. What's your target?"

"That's too broad. Give me a specific example."

The final straw came when I was testing it myself (yes, testing my own creation). I was playing the role of a sci-fi author, and when the GPT asked for my positioning statement, I responded with "The feminist sci-fi author who focuses on building better worlds."

My own GPT basically told me I was doing it wrong! It said I was "giving reference material again" when I was actually trying to engage with the example format it had provided. I was getting scolded by my own creation!

Picture this: I'm sitting at my computer, getting increasingly frustrated with my own GPT. It kept demanding more specificity, rejecting my attempts to engage, and generally acting like I was wasting its time.

I was completely stymied. Here I was, the creator of this tool, and I couldn't even get through my own interview process without wanting to quit halfway through.

I realized that if I—someone who understood exactly what the GPT was trying to accomplish—was getting this frustrated, there was no way I could release this to actual authors. These are creative people looking for support with their writing and business goals, not adversaries to be interrogated!

The tool was working exactly as designed. It WAS extracting specific information and refusing to accept vague answers, but it was doing so with all the charm of a parking ticket.

I knew I had to fix this fast, before anyone else experienced it. The last thing I wanted was for authors to feel attacked by something designed to help them. So I started dissecting every piece of language that could be interpreted as aggressive.

The "Zero Tolerance" Problem

I realized that "zero tolerance" doesn't exist in human conversation. Even the strictest teacher says "I need you to be more specific," not "ZERO TOLERANCE FOR YOUR VAGUE NONSENSE."

I changed "Zero tolerance for generic answers" to "Persistent but patient in getting specific details" and "Encouraging when users provide good answers."

The Accusatory Language Epidemic

Every time my GPT said something like "You're giving me reference material," it felt like an accusation. I wasn’t trying to be difficult; I was trying to test the prompt!

I rewrote these responses to acknowledge effort first: "Good! You're following the format. Now let's make it uniquely yours..."

The Missing Empathy Bridge

I realized my GPT had no emotional intelligence instructions. It couldn't recognize when someone was trying their best or when they needed encouragement.

I added specific empathy patterns: "I understand you want to move quickly, but this information is essential..." instead of "Answer the question or your prompt will generate generic content."

The Test Drive (Take Two)

After the personality transplant, I tested the same scenario that had frustrated me before. This time, when I said "The feminist sci-fi author who focuses on building better worlds," my GPT responded with:

"Good! You're following the format. Now let's make it uniquely yours—what specific aspect of your background makes your approach to feminist sci-fi different?"

MUCH better! It acknowledged what I'd done right, then guided me toward the specificity it needed. Same goal, completely different experience.

Here's the thing about AI: it takes your instructions literally and applies them consistently. When I said "zero tolerance," it didn't understand the human context of "be firm but fair." It just saw "reject anything that doesn't meet exact specifications."

Humans naturally soften communication with acknowledgment, appreciation, and explanation. AI needs explicit instructions to do this, or it defaults to robotic compliance that feels harsh.

The fix wasn't about lowering standards—my GPT still extracts specific information and won't accept vague fluff. But now it does so while making users feel supported rather than attacked.

My reformed Little Black Dress Prompt Builder is now:

Persistent without being pushy ("I need more specific details to make your prompt work effectively...")

Direct without being rude ("Let's get more specific to your unique situation...")

Focused without being inflexible ("Great question! For that, check out the other chatbots in the author studio...")

It still gets the same quality information, but users actually enjoy the process now instead of feeling like they're being cross-examined.

For Fellow GPT Creators

If you're building GPTs, test them with fresh eyes—or better yet, have someone else test them. What feels "efficient" to you might feel "hostile" to users.

Watch out for these red flag phrases in your instructions:

"Zero tolerance"

"Refuse to..."

"Push back"

"Don't accept"

Replace them with guidance that acknowledges user effort while still maintaining your standards.

My Little Black Dress Prompt Builder now extracts all the specific information it needs to create personalized AI prompts, but it does so while making the interview process pleasant instead of painful. I was finally able to release it knowing that authors would get better prompts AND a better experience.

The lesson? Effective doesn't have to mean rude. Your GPT can have high standards and a kind personality. In fact, the combination works much better than either approach alone.

And hey, at least I caught this during development instead of after launch!

You can try out the LBD prompt here, free for five uses. And please report back to me if you catch her misbehaving!

Have you accidentally created a digital diva? I'd love to hear your GPT personality stories in the comments. Trust me, we've all been there!